Implementation Quality Assurance: Advanced Technical Audit for Production Readiness

A practical framework for assessing whether delivered AI systems are fit for sustained, dependable production use. This discipline bridges the gap between "done" and "deployable" through systematic quality assurance and pre-deployment diligence.

Executive Summary

The Issue: AI delivery models dangerously equate "done" with "deployable." Systems declared complete in controlled settings often degrade silently in production, breaking on unexpected formats, collapsing under live traffic, and failing without diagnostic visibility.

The Fix: Implementation Quality Assurance, a systematic technical audit across five dimensions (Architecture, Testing, Metrics, Monitoring, Risk Coverage). It proves that systems can fail predictably, report honestly, recover reliably, and evolve safely before deployment, not after damage.

Introduction

AI systems are often declared complete when they pass tests, meet deadlines, and demonstrate results in controlled settings. But the conditions under which those systems were evaluated rarely resemble the complexity of real production.

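This is where risk accumulates, not in dramatic failure, but in slow, silent breakdowns: systems that break on unexpected formats, collapse under live traffic, or fail without diagnostic visibility.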

These are not rare occurrences. They are common outcomes of delivery models that equate "done" with "deployable".

Implementation Quality Assurance exists to challenge that assumption.

Here we focus on a specific discipline: how to assess whether a delivered AI system, from any source, is fit for sustained, dependable production use.

Whether the system was built in-house, delivered by a vendor, or acquired through partnership, it must be tested not just for correctness, but for robustness, observability, scalability, and risk posture.

We extend the themes explored in AI Assurance and AI Governance, but sharpen them around one practical question:

Is this system ready for real-world operation, or just ready for sign-off?

You'll see how we evaluate systems across five key dimensions:

- Architecture

- Testing coverage

- Performance metrics

- Monitoring infrastructure

- Failure and recovery readiness

This is not audit for its own sake. It is a safeguard against deploying confidence that hasn't been earned.

What Real Readiness Looks Like

Production readiness is often declared, rarely verified.

In many AI projects, "readiness" means the system functions as expected, passes its tests, and looks good in a demo. But these tests are usually scoped around success paths: ideal workflows, on clean data, under assumed conditions.

Real-world systems aren't consumed under ideal conditions.

Live environments introduce variability, concurrency, system interdependencies, edge cases, user unpredictability, and worst of all: silence. Most AI systems don't crash when they fail. They degrade quietly. They make incorrect inferences. They reroute data improperly. They exhaust resources unexpectedly. And they do all of this while still returning outputs that look plausible.

What We See Too Often

Across internal builds, vendor engagements, and AI consultancies, common readiness oversights include:

These omissions don't require incompetence or bad intentions. They often arise from project incentives: deliver the scope, pass the test, meet the deadline.

But that model no longer works for AI systems. These systems don't just have traditional failure modes; they evolve new ones. And they don't just deliver outputs; they make decisions that affect operations, customers, compliance, and reputation.

What Readiness Actually Means

We define implementation readiness not by what has been built, but by what has been proven under pressure.

A production-ready system demonstrates:

None of this needs to be elaborate. But it must exist. If you cannot answer how the system performs under stress, what it does when it fails, and who's responsible when it degrades, it isn't ready.

Why This Matters Now

AI adoption is entering a second phase. The first was about experimentation: proofs of concept, innovation showcases, and early integration. The second is about production: sustained value delivery at scale, under real-world constraints.

Without quality assurance at implementation level, organisations move forward with systems that may succeed in demo environments but cannot survive contact with reality.

Implementation QA is not about finding problems. It's about surfacing the truth of the system before the users, regulators, or press do.

The Five QA Dimensions

A system that's done isn't necessarily durable. That's why we evaluate production readiness across five dimensions. Each one reveals a different surface where risk hides and where quality can be made visible.

These aren't arbitrary categories. They reflect where AI systems most commonly fail after sign-off and where quality assurance, when done properly, can prevent real-world damage.

1. Architecture

Most AI systems are built to function. Fewer are built to function reliably under real-world pressure.

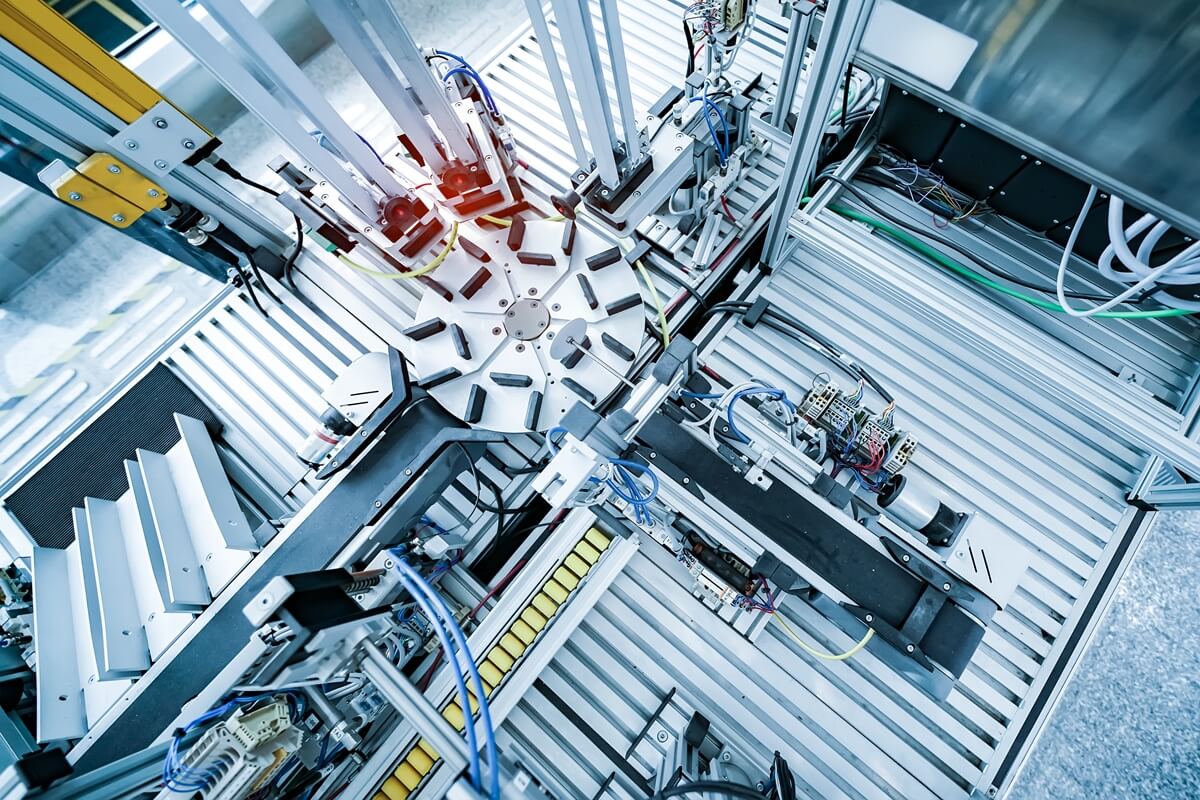

This dimension assesses system structure: how components interact, scale, fail, and recover. Are resources managed under concurrency? Is inference isolated from pipeline failures? Are retries safe or do they cause cascading errors?

Red Flags include monolithic design, resource spikes under load, no separation between critical and non-critical paths, and no capacity planning.

Good architecture anticipates entropy. It's observable, modular, and designed to survive when things go wrong, not just to succeed when things go right.
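One way to make "are retries safe?" concrete is to pair bounded retries with a circuit breaker, so that a failing dependency is retried a few times and then left alone rather than hammered. The sketch below is illustrative only: `CircuitBreaker`, `CircuitOpenError`, and `call_with_retries` are hypothetical names, and the thresholds are assumptions rather than recommendations.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are rejected immediately."""


class CircuitBreaker:
    """Stops calling a failing dependency after repeated consecutive failures."""

    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("dependency unavailable; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def call_with_retries(breaker, fn, attempts=3, base_delay_s=0.1, sleep=time.sleep):
    """Retry with exponential backoff, but never retry through an open breaker."""
    for attempt in range(attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # the breaker has tripped: stop adding load
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay_s * (2 ** attempt))
```

The design choice worth auditing is the interaction between the two: retries without a breaker multiply load exactly when a dependency is weakest, which is how a local fault becomes a cascading one.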

2. Testing

Most AI testing focuses on whether the model behaves as expected. But in production, the model is just one part of the system, and the test suite must reflect that.

We look for test coverage across interfaces, failure cases, input anomalies, degraded services, and unexpected user behaviour.

Immature systems only test their "happy path". Mature systems simulate the chaotic, unpredictable conditions that real environments introduce.

Quality assurance means more than passing tests. It means the right things were tested, under the right conditions, with credible evidence of what passed and what failed.
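As an illustration of testing beyond the happy path, the sketch below feeds deliberately malformed inputs to a scoring wrapper and checks that each one is rejected explicitly instead of producing a plausible-looking score. The input contract (`amount`, `currency`), the `score` wrapper, and the stand-in model are all assumptions made for the example.

```python
import math


def validate_features(payload):
    """Return (ok, reason) for a hypothetical contract: a dict with a finite
    numeric 'amount' and a non-empty string 'currency'."""
    if not isinstance(payload, dict):
        return False, "payload is not an object"
    amount = payload.get("amount")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        return False, "amount is missing or not numeric"
    if isinstance(amount, float) and not math.isfinite(amount):
        return False, "amount is not finite"
    currency = payload.get("currency")
    if not isinstance(currency, str) or not currency.strip():
        return False, "currency is missing or empty"
    return True, ""


def score(payload, model=lambda p: 0.5):
    """Wrap a model call so invalid input yields an explicit rejection, not a guess."""
    ok, reason = validate_features(payload)
    if not ok:
        return {"status": "rejected", "reason": reason}
    return {"status": "ok", "score": model(payload)}


ANOMALOUS_INPUTS = [
    None,
    [],
    {},
    {"amount": "12.50", "currency": "GBP"},        # number sent as text
    {"amount": float("nan"), "currency": "GBP"},
    {"amount": float("inf"), "currency": "GBP"},
    {"amount": True, "currency": "GBP"},
    {"amount": 10, "currency": "  "},
]


def test_anomalous_inputs_are_rejected():
    for payload in ANOMALOUS_INPUTS:
        result = score(payload)
        assert result["status"] == "rejected", payload
        assert result["reason"]
```

A test like this produces the kind of evidence an audit can use: a named list of anomalies, and a recorded outcome for each.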

3. Metrics

Benchmarks are easy to manipulate. Metrics can be cherry-picked. In AI systems, this problem is amplified because performance depends on data, usage, concurrency, and drift.

This dimension validates what's being measured, how, and whether the results hold up under production conditions.

Key indicators:

Without reliable metrics, you're navigating by story, not signal. Production readiness demands truth in numbers.
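A common way metrics get cherry-picked is by reporting a mean latency measured one request at a time. The sketch below, offered as an assumption-laden illustration rather than a benchmark harness, measures latency under concurrent calls and reports tail percentiles alongside the mean. The function names and the nearest-rank percentile method are choices made for this example.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def percentile(sorted_values, q):
    """Nearest-rank percentile for q in (0, 100]; expects a non-empty sorted list."""
    rank = max(1, math.ceil(q * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def measure_latencies(fn, requests, concurrency):
    """Call fn(request) from `concurrency` worker threads; return per-call seconds."""
    def timed(request):
        start = time.perf_counter()
        fn(request)
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed, requests))


def latency_report(latencies):
    """Summarise latencies with the mean and the tail, not the mean alone."""
    ordered = sorted(latencies)
    return {
        "count": len(ordered),
        "mean": sum(ordered) / len(ordered),
        "p50": percentile(ordered, 50),
        "p95": percentile(ordered, 95),
        "p99": percentile(ordered, 99),
    }
```

Running `latency_report(measure_latencies(handler, sample_requests, concurrency=16))` against a realistic request sample, where `handler` and `sample_requests` are placeholders for the system under audit, gives numbers that can be compared with whatever figures were quoted at sign-off.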

4. Monitoring

Once deployed, AI systems rarely crash. They degrade.

This dimension asks: how observable is the system after go-live?

We look for:

Without monitoring, failure is discovered when users complain or auditors call. Monitoring isn't a luxury; it's the diagnostic layer that separates accountable systems from black boxes.
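Quiet degradation is often first visible as a shift in input or score distributions. One widely used signal, chosen here purely as an example and not named in the text above, is the Population Stability Index (PSI), which compares a live sample against a baseline. The minimal sketch below assumes numeric values and equal-width bins taken from the baseline's range.

```python
import math


def psi(baseline, live, bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples.

    Bin edges come from the baseline's range; eps avoids log(0) for empty bins.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        return [max(c / len(sample), eps) for c in counts]

    p, q = proportions(baseline), proportions(live)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A frequently quoted rule of thumb treats values below about 0.1 as stable and values above about 0.25 as a significant shift, but any alert threshold should be treated as an assumption to validate against the system's own history.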

5. Risk Coverage: What Happens When It Breaks?

Every system has failure modes. This dimension evaluates whether they've been acknowledged and planned for.

True readiness doesn't mean perfect reliability. It means known failure states, bounded consequences, and recovery paths that don't rely on improvisation.

Systems that cannot fail safely cannot be trusted, no matter how well they perform on day one.
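"Known failure states" and "bounded consequences" can be sketched as a decision wrapper that never lets a model error or a low-confidence result pass as a normal answer. In the example below the `Decision` type, the `refer_to_human` fallback, and the 0.8 confidence threshold are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    value: str
    source: str       # "model" or "fallback"
    reason: str = ""  # populated only when the fallback was used


def decide(features, model, fallback_value="refer_to_human", min_confidence=0.8):
    """Return the model's decision only when it succeeds and is confident enough."""
    try:
        label, confidence = model(features)
    except Exception as exc:
        return Decision(fallback_value, "fallback", f"model error: {type(exc).__name__}")
    if confidence < min_confidence:
        return Decision(fallback_value, "fallback", f"low confidence: {confidence:.2f}")
    return Decision(label, "model")
```

Because every result records its `source`, the rate of fallback decisions becomes something that can be counted and alerted on, instead of a failure mode that hides inside plausible outputs.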

How to Audit Without Paralysis

Quality assurance doesn't mean stopping everything. It means asking the right questions, at the right time, to reveal what might fail when it's too late to fix.

Some organisations fear that audits will delay delivery or generate endless rework. That's a reasonable concern when audits are bureaucratic, unfocused, or purely retrospective. But that's not what we're advocating.

A good QA process surfaces dealbreakers early, constructively, and without ambiguity.

It's not about creating friction. It's about revealing invisible assumptions before they become expensive errors.

The Right Questions at the Right Time

Here's what effective, lightweight QA looks like:

Architecture

Testing

Metrics

Monitoring

Risk

You don't need 400 checklist items. You need a sharp lens.

Who Does This?

Implementation QA doesn't need a new department. It needs someone who understands what real-world systems look like and who knows how to ask hard questions without slowing the team down.

That might be:

What matters is that someone looks at the system as it will be lived with, not just as it was delivered.

Why It Works

The point of this audit is not to penalise teams. It's to protect sponsors, protect users, and protect the business from false certainty.

AI systems make decisions. They affect outcomes. When they fail, they don't just stop working; they work badly, quietly, and for far too long before anyone notices.

A short, clear-sighted QA process is how you prove that what's been built can survive what's coming.

If that confidence hasn't been earned and demonstrated, the system shouldn't be deployed.

Pre-Deployment Diligence

Implementation QA doesn't start at handover. It begins earlier, when the system is being pitched, positioned, or proposed. This is the moment where confidence is highest and due diligence is most needed.

Many AI systems are accepted on the basis of claims: proprietary algorithm, scalable architecture, enterprise-grade design, responsible AI. But unless these are interrogated before go-live, you're not just buying the system; you're inheriting its blind spots.

Here's what real diligence looks like:

Algorithmic Differentiation

Is the model genuinely novel or just a remix of standard techniques? Can its performance be reproduced using public tools? Proprietary doesn't mean valuable, and most AI IP isn't defensible unless it's tied to unique data, architecture, or operational advantage.

Architecture at Scale

Has the system been load-tested? Can it support concurrency? Are there diagrams, failover models, retry logic, and isolation of failure domains? The absence of an outage plan is a signal, not of confidence, but of inexperience.

Integration Readiness

Is this a boxed model, or a system that can be governed, observed, and maintained within your environment? Systems that "only run on our stack" or "require bespoke infrastructure" are not enterprise-ready; they're isolation-ready.

Team Capability

Who built it? Can they support it? Do they understand production, not just prototypes? A team that cannot explain its own failure cases, rollback plan, or monitoring architecture is not yet ready to support you, even if their demo runs smoothly.

Governance and Risk

Can model decisions be explained? Are inference logs versioned and auditable? Has bias been tested, drift monitored, fallback logic validated?

These aren't just technical niceties; they're the conditions under which trust, scale, and value become possible. If any of them are unclear, undocumented, or evasively answered, that's not a gap to be fixed later. That's a risk to be priced now or walked away from.

Pre-deployment diligence isn't about doubt. It's about discipline. The cost of skipping it is always paid, but rarely by the team that made the promise.
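The Governance and Risk question about versioned, auditable inference logs can be made tangible with a small sketch. The example below assumes an in-memory, append-only log in which each record carries the model version and commits to the previous record's hash, so later edits are detectable. `InferenceLog` and its field names are hypothetical, and a real system would also need durable storage and access control.

```python
import hashlib
import json


def _digest(obj):
    """Stable SHA-256 over a JSON-serialisable object."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


class InferenceLog:
    """Append-only log; each record commits to the previous record's hash."""

    def __init__(self):
        self.records = []

    def append(self, model_version, inputs, output, timestamp):
        prev_hash = self.records[-1]["hash"] if self.records else "0" * 64
        body = {
            "model_version": model_version,
            "input_digest": _digest(inputs),  # store a digest, not raw inputs
            "output": output,
            "timestamp": timestamp,
            "prev_hash": prev_hash,
        }
        record = dict(body, hash=_digest(body))
        self.records.append(record)
        return record

    def verify(self):
        """Recompute every hash; False means a record was altered or reordered."""
        prev_hash = "0" * 64
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True
```

During diligence, the useful question is whether the delivered system offers something equivalent: can a given decision be traced to a specific model version, and would tampering with the record be noticed?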

Operating After Deployment

For many teams, deployment marks the end of the project. For serious systems, it's only the beginning.

A truly production-ready AI system must include not just the logic of its current behaviour, but the capability to evolve safely. That means managing upgrades, patches, model replacements, and rollback scenarios without creating new failure modes or exposing the business to silent regressions.

Deployment is not an act. It is a lifecycle.

What Maturity Looks Like

In a well-prepared system, you'll find:

And perhaps most importantly:

Ownership of Upgrade Discipline: Someone is accountable for versioning, promotion, evaluation, and rollback. It is part of the process, not improvisation.
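Versioning, promotion, evaluation, and rollback can be expressed as a very small amount of explicit state. The sketch below is one hypothetical shape for it: `ModelRegistry`, the single evaluation score per version, and the `max_regression` tolerance are assumptions for illustration, not a description of any particular registry product.

```python
class ModelRegistry:
    """Tracks which model version is live and keeps history for rollback."""

    def __init__(self, initial_version, initial_score):
        self.scores = {initial_version: initial_score}
        self.history = [initial_version]  # last element is the live version

    @property
    def live(self):
        return self.history[-1]

    def promote(self, version, score, max_regression=0.01):
        """Promote only if the candidate does not regress beyond the tolerance."""
        baseline = self.scores[self.live]
        if score < baseline - max_regression:
            return False  # gate failed: the live version is unchanged
        self.scores[version] = score
        self.history.append(version)
        return True

    def rollback(self):
        """Return to the previously live version; refuse if there is none."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.live
```

The point is less the mechanism than the fact that promotion has a gate and rollback has a defined target, so neither depends on someone remembering what was running last week.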

What Immaturity Looks Like

In contrast, immature systems often exhibit:

These systems may pass all functional tests. But they cannot evolve safely, and every update becomes a strategic risk.

Why This Matters

AI systems are not static. Models degrade. Inputs change. Business goals evolve. What works today may become toxic in six months, not because of error, but because of entropy.

This means your quality assurance process must evaluate not just "Is it good now?" but:

The answers to these questions separate products from prototypes, and serious delivery from well-intentioned risk.

Conclusion: Why This Audit Protects Your Strategy

It's easy to confuse progress with readiness. A working model, a successful pilot, a green test suite: these are all necessary milestones, but they are not proof of sustainability.

AI systems don't fail like traditional systems. They don't stop; they drift. They don't crash; they degrade. And the consequences aren't always visible until reputational, financial, or regulatory damage has already occurred.

This is why Implementation Quality Assurance exists.

It is not about bureaucracy. It is about clarity.

Not about criticism. About confidence.

It protects leaders from signing off on something that only looks complete. It protects programmes from deploying fragile solutions in complex environments. And it protects users, clients, and citizens from systems that can't be governed once they're live.

A proper QA discipline helps organisations:

This doesn't require heavy process. But it does require experienced judgment and the courage to pause when something critical is unclear.

You don't need perfect systems. You need systems that fail predictably, report honestly, recover reliably, and evolve safely.

If you can't see those capabilities clearly, then the system isn't ready for production, no matter how confidently it was delivered. Implementation QA is how you prove that confidence has been earned.

Ultimately, Implementation Quality Assurance is not a brake on your AI strategy. It is the control system that makes scale possible. It enables faster, safer deployment by ensuring that every system released into production can be trusted to perform, evolve, and recover. With this discipline in place, you gain the confidence to deliver more ambitious capabilities, reduce exposure, and build a portfolio of systems that compound value over time.